WBEZ’s Dueling Critics greet their new robotic overlords (part 1)

Posted: July 2, 2012 Filed under: Uncategorized

Just before I recorded my conversation with Kristian Hammond about applying insights from his machine-generated storytelling work to the Send My Robot project, I bumped into WBEZ’s dueling critics, Kelly Kleinman and Jonathan Abarbanel. When I told them that Hammond and his colleagues at Narrative Science had computer software that could write news stories, they wondered, half-joking, if they could be next. I told them not to be silly.

As it turned out, Hammond told me later that generating “Siskel and Ebert style” movie-review segments was old technology from his work at Northwestern University’s Intelligent Information Lab. A project called “News at Seven” had created a full-on newscast– anchored by animated cartoon avatars– that was entirely machine-generated.

And it included the movie review segment that Hammond had talked about.

So I arranged for the three of them to meet, in an on-air conversation on WBEZ’s 848, moderated by Richard Steele. Kelly posted some reflections– possibly a bit more alarmed than amused– to WBEZ’s site in anticipation.

I think the truth about the state of the art was a relief to Kelly and Jonathan, but the hints about where it’s going may have caused them a bit more concern.

Relief: News at Seven doesn’t actually review movies. It writes a script based on analyzing what other critics are saying about a movie on sites like Rotten Tomatoes and Metacritic.

And, as Hammond himself admits, the cartoon avatars are lousy performers– especially vocal performers. That darn text-to-speech technology still isn’t ready for prime time.

Concern: Hammond thinks that sending an actual robot to a play, and having it generate a review, could possibly work someday. The same technology that could make my robot work– assessing tone of voice, detecting movement– could allow a robot to sense when an audience laughs hard, seems to be crying, holds its breath in suspense.

And maybe– this is my speculation– generate a review that at least could tell you how strongly the audience might have been affected. (Think about those studies where scientists measure how turned-on human subjects get by looking at various kinds of porn…)

Here’s the audio from that conversation, if you want to hear how it went down.

… and after they left the 848 broadcast, Hammond and the critics kept the conversation going. I roped them into an adjoining studio and rolled tape. That’s part 2, coming later this week.

If robots can write news stories, why can’t they go to meetings for us?

Posted: July 2, 2012 Filed under: Uncategorized

If anybody can advise me on whether – and how – this could work, it’s Kristian Hammond. He’s a computer-science professor at Northwestern University, and he’s been studying artificial intelligence for more than 20 years. And at least two of the projects he’s worked on make him perfect for this robot thing.

One of them is a company called Narrative Science, where Hammond is Chief Technology Officer. They’ve been around a couple of years, and they’ve gotten a lot of attention for building software that writes — well, generates — stories, including news stories, based on data.

Like recap stories for Little League games, for example. Parents use an iPhone app to enter all the box-score data, and the software kicks out a writeup you can email to grandma.

The computer experts at Narrative Science work with journalists — and they develop detailed templates for stories, including ways to mine the box score data, to identify: “What’s the main idea here? What’s the lede?”

Here’s how one sample story begins: Cole Benner did all he could to give Hamilton A’s-Forcini a boost, but it wasn’t enough to get past the Manalapan Braves Red.

Narrative Science also trains its software to pick key moments to highlight, like the following: The inning got off to a hot start when Bullen singled, bringing home Cappola.

These are all real examples, and as you can tell, the Narrative Science guys give their machine a vocabulary of distinctive sports-writer verbs. (Another sample: The Amelia Bulldogs had no answer for Ringland, who cruised on the rubber. What does that one even mean?)
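For the curious, here is a toy sketch of the template idea: look at the box score, decide what the main idea is, then fill in a sentence. To be clear, this is not Narrative Science's software. The field names (`winner_runs`, `losing_star` and so on), the two-run cutoff for a "close" game, and the verb lists are all made up for illustration.

```python
import random

# Hypothetical verb phrases; the real system's vocabulary is not known here.
BLOWOUT_VERBS = ["cruised past", "rolled over", "had no trouble with"]
CLOSE_VERBS = ["edged", "squeaked past", "held off"]


def pick_lede(game, rng=None):
    """Return a one-sentence lede for a game described by a box-score dict."""
    rng = rng or random.Random()
    margin = game["winner_runs"] - game["loser_runs"]
    star = game.get("losing_star")

    # Assumed rule: a close loss with a standout player makes that player the story.
    if margin <= 2 and star:
        return (
            f"{star} did all he could to give {game['loser']} a boost, "
            f"but it wasn't enough to get past {game['winner']}."
        )

    # Otherwise the score is the story, dressed up with a sports-writer verb.
    verbs = CLOSE_VERBS if margin <= 2 else BLOWOUT_VERBS
    return (
        f"{game['winner']} {rng.choice(verbs)} {game['loser']}, "
        f"{game['winner_runs']}-{game['loser_runs']}."
    )
```

Feed it a dict with the two team names, their runs, and (optionally) the losing side's best player, and it picks one of two story angles. A real system would presumably weigh many more angles than that.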

Narrative Science produced more than 300,000 Little League stories last year. For this year, Hammond says they’re on track to produce 2 million.

They also play in the big leagues: Narrative Science software writes quarterly-earnings preview stories for forbes.com.

(For example: Wall Street is high on Nike (NKE), expecting it to report earnings that are up 10.5% from a year ago when it reports its fourth quarter earnings on Thursday, June 28, 2012.)

Hey, wait: Does this thing already replace journalists?

Not exactly. As Hammond pointed out when we talked, no editor is going to pay a human reporter to cover a Little League game – “because how many people are going to read that story — twenty?” He said that covering stories that would otherwise be ignored is “emblematic” of their work in media.

“It’s not as though companies are saying, ‘Oh, we’re going to pull reporters or journalists off of a project,’” Hammond said. “It’s that, ‘We’ve never been able to do X. We’ve never been able to write earnings previews for ALL of the companies. We’ve never been able to cover all of the games.’”

Whew.

Good. This is pretty close to what I want: It does something I don’t think is worth my time. I don’t want to go cover a Little League game. Earnings-preview stories? They’re pretty dull too.

So that’s one of the projects that makes Hammond the guy to ask for advice on my robot.

The second one was a part of his core academic research — which is a big-picture inquiry into how people think – especially how they tell stories, and what makes stories compelling.

This project, called “Buzz,” trolled the blogosphere for compelling personal stories, then had two-dimensional cartoon avatars perform them like dramatic monologues. “Buzz” eventually went on display as an art installation with a grid of nine cartoon-avatar faces, taking turns to tell their stories. One would talk, and the others would turn to listen. That’s my meeting right there.

But another part of “Buzz” — finding the stories themselves — is also important for my project: My robot has to pay special attention when something interesting happens — maybe even alert me. How did Hammond and his colleagues train “Buzz” to identify interesting stories?

“It’s hundreds of cheap tricks,” Hammond said. “And none of them work well, but they work well in tandem. So we would look for excessive use of personal pronouns, words that had to do with relationships, emotionally impactful words.”

(For example, a sample story on the Buzz website begins this way: My husband and I got into a fight on Saturday night… and it ended in hugs and sorries. But now I’m feeling fragile.)
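Here is a rough sketch of what a few "cheap tricks" working in tandem might look like. It is not the actual Buzz code: the three word lists are tiny and invented, and simply adding the three shares together is my own assumption about how weak signals could be combined.

```python
import re

# Hypothetical word lists; a real system would use far larger ones.
PERSONAL_PRONOUNS = {"i", "me", "my", "mine", "we", "us", "our"}
RELATIONSHIP_WORDS = {"husband", "wife", "mom", "dad", "boyfriend", "girlfriend", "friend"}
EMOTION_WORDS = {"fight", "fragile", "hugs", "love", "cried", "afraid", "sorry", "sorries"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower().replace("’", "'"))


def share(words, vocabulary):
    """Fraction of the words that appear in the given vocabulary."""
    if not words:
        return 0.0
    return sum(w in vocabulary for w in words) / len(words)


def story_score(text):
    """Add up three weak signals; none is reliable alone (assumed equal weights)."""
    words = tokenize(text)
    return (
        share(words, PERSONAL_PRONOUNS)
        + share(words, RELATIONSHIP_WORDS)
        + share(words, EMOTION_WORDS)
    )
```

Run the sample story above through `story_score` and it gets a positive score; the Nike earnings sentence scores zero with these lists. Crude, but that seems to be the spirit of the thing.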

So: This guy’s got machines that can go someplace I don’t wanna go, pretend to listen, and actually pick out what might be interesting or important. I think we might be onto something.

What else could I get it to do? I sat down with Hammond to find out.

First request: I’d like it to be me – to speak in my voice, and, more importantly, learn how I behave in meetings. Maybe it would get to know me better than I know myself: Oh, Dan leans forward when this one topic gets mentioned. Or: When Dan is bored, he scratches himself in this funny way.

Hammond said this kind of thing is in the works. “Intel just announced that it’s putting a huge effort into machine-learning of individual behaviors,” he said. “The example being, if you forget your keys, and you do so habitually, the system will learn to remind you to find your keys and remember where you put them.”

Oh, yes. This is perfect. We need this at my house. My wife needs this. (I want it to say, kindly: “You know, you were wearing that blue jacket yesterday. Have you looked in the pockets there?”)

“In the long run,” Hammond said, “the computer is supposed to be a device that helps us in all aspects of our life, and in order to help somebody really well, you have to know them.”

An empathetic machine?

“A knowledgeable machine,” said Hammond. “I hesitate to say empathetic.”

Did that idea – an empathetic machine – maybe creep him out a little bit?

“No,” he said. “I work on artificial intelligence. From the beginning of humans being able to think, we’ve always wanted to know how that works. And artificial intelligence is just saying, we need to know how that works at a level at which we can turn it into an algorithm. But the entire field of psychology is devoted to understanding humans. But you would never look at psychology and say, ‘Wow! Psychology’s creepy.’”

When I steered Hammond back to my selfish and mercenary project, I got a nice surprise:

“I think you should build it,” he said. “I really do. It’s absolutely something that should be built.”

Yes! There was, of course, a word of caution:

“I gotta tell you,” Hammond said. “You miss enough of these meetings, and they’re gonna stop inviting you.”

Well, that would be perfect, wouldn’t it? And what a selling point: You don’t even have to buy the machine – just rent it until they stop inviting you to the meetings.

I am totally building this thing.

P.S. When this story aired on WBEZ in June 2012, Hammond joined the station’s “dueling critics”– theater reviewers Kelly Kleinman and Jonathan Abarbanel– on the morning talk show “848” to discuss the question: Could computers replace them?

Answer: In part, that’s old technology. Kleinman’s pre-show write-up of her concerns is here.

The conversation itself, in which “WBEZ’s Dueling Critics Meet their New Robotic Overlords,” is here:

Help wanted: Young robot experts

Posted: February 28, 2012 Filed under: Uncategorized

I need your help to build a DIY “telepresence” robot– basically Skype on a Roomba. I call them “skip-the-commute” robots, but mine is going to be more of a “skip-the-meeting” thing. A tele-absence robot.

But I’ve gotta get on top of telepresence first: My robot will have to perform at least as well as– and double as– a telepresence model; otherwise, colleagues will know for sure that I’m never “there,” operating the thing.

Various companies produce high-end versions; Anybots is probably the best-known. The highest-end example I’ve found comes from a company called InTouch Health, which produces “medical telepresence” robots.

(I’m actually going to Loyola University Medical Center tomorrow, to see one in action– doing a visit in the pediatric ward. They also have one working with a neuro-surgeon, but for my purposes, the pediatrician is probably the best test-case: If a robot can comfort a sick, vulnerable child, then it should do a fine job keeping my colleagues company.)

And the Roomba people– the iRobot Corporation– just unveiled an awesome “next-generation” robot at the Consumer Electronics Show in January: AVA ups the ante by (a) using a tablet computer for her “head”— the software is an app for iOS and Android–and (b) being able to navigate a room on her own.

I’m going to Texas for SXSW next month, and I’ll be missing some of the classes I teach at Columbia College while I’m away. So this seems like a great opportunity to test out telepresence: Could I teach a class via robot?

I asked AVA for a date, but she’s busy. Similarly, the InTouch Health robot isn’t anywhere near Chicago that week.

But just last week, a guy in California named Johnny Chung Lee posted instructions for making a DIY version, using a modified Roomba kit (called iRobot Create) and a netbook. Total cost is about 1/20th of what the big guys charge for theirs.

It’s super cool. And it looks pretty easy to do. But– and this is just between you, me and the Interwebs– I actually know nothing about building things. Or writing code. Or anything.

Or, as Groucho Marx said, “Why a child could figure this out! Quick, somebody find me a child. I can’t make head or tail of it.”

I know there are robotics clubs all around the city with incredible robot-building kids. But I don’t know them! Do you? If so, please have them get in touch! I’m [send my robot] {AT} Gee, male {DOT] com.

Many thanks!!

You, Robot in the house. (Virtually, anyway.)

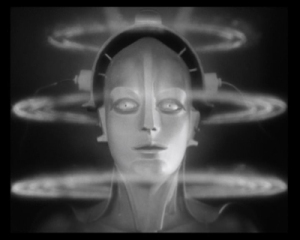

Posted: February 17, 2012 Filed under: Uncategorized

Hi. Nice to meet you! I think we’re going to get along just great.

Do you think this profile picture makes me look fat?

Or spooky?

It’s OK, you can be honest with me.

Hey, did I mention that I’m on Twitter? @sendmyrobot, of course!

See you there.

And if there’s anything you need, just ask. I am here to serve.

Question 1: How realistic should my robot look? (Answer: Don’t go overboard.)

Posted: February 17, 2012 Filed under: Uncategorized

My to-do list for this year: Create invention. Change the world. Get rich.

The invention: A robot that goes to meetings for you.

Changing the world: In addition to freeing up a load of time, this invention will prevent a ginormous number of needless conflicts. (If you hadn’t been at the meeting, you wouldn’t have responded to your colleague’s idiotic comment, and we’d all be better off, right?)

Getting rich: This part seems obvious, since everybody needs one.

So, job one: Major R&D. How convincing can this robot be?

Time to call Malcolm MacIver, a Northwestern University scientist who consulted with the producers of the Battlestar Galactica prequel Caprica. In other words, he’s one of the guys Hollywood people call when they want to know, “How do we make this robot really lifelike?”

And he’s built these crazily-awesome robotic fish. (They even sing.) But fish–even singing fish–don’t go to meetings.

MacIver tells me about a project by a colleague of his in Japan, Hiroshi Ishiguro. “He had the Japanese movie-making industry create a stunningly-accurate reproduction of him,” MacIver says. “So he can send his physical robot to a meeting and it will smile and furrow its brow, and talk through his mouth.”

How accurate are we talking about? “It’s realistic enough that he doesn’t want to show his young daughter,” MacIver says, “because he thinks it would creep her out.”

Wow. So, is this Ishiguro guy beating me to market? No, as MacIver describes things, Ishiguro uses his robot for pure research.

Specifically, Ishiguro studies non-verbal elements of communication “by disrupting them,” says MacIver. “So you can say, ‘OK, I’m going to shut off eyebrow movement today, and how does that affect people’s ability to understand what I’m talking about?’ You know, are they still able to get the emotional content?”

So, back to stunningly-accurate: Ishiguro’s robot would creep out a three-year-old… but does it fool his adult research subjects? Would it fool my colleagues, if I left eyebrow-movement switched on?

Not so much, says MacIver.

What if he just got a much, much bigger grant? “Um, unlikely,” MacIver says.

OK: super-lifelike, no-go.

Moving on…

There’s a robot that listens really well. It can kind of convince you that it’s listening to you. When I saw the YouTube video, it looked like WALL*E.

It had these big goggle eyes that would bug out a little bit. It nods, makes eye contact, responds emotionally to you. The point of the experiment was kind of heartbreaking: Could you make old people in nursing homes less lonely, if they had someone to listen to them, and would this do it?

And even for ten seconds, watching this guy in the lab coat, you think: Yeah, maybe.

So, I tell MacIver, now I’m starting to think that the robot should be a cartoon version of me.

“Well, right, that’s a good point,” he says. “If you can’t do it perfectly, go to the other side of the uncanny valley and you’ll be more effective.”

The “uncanny valley” turns out to be this phenomenon where, when animated characters—or robots– get too real-looking, they become creepy. Like in the 2004 movie, The Polar Express.

Lawrence Weschler explained it this way in a 2010 interview with On the Media:

If you made a robot that was 50 percent lifelike, that was fantastic. If you made a robot that was 90 percent lifelike, that was fantastic. If you made it 95 percent lifelike, that was the best – oh, that was so great. If you made it 96 percent lifelike, it was a disaster. And the reason, essentially, is because a 95 percent lifelike robot is a robot that’s incredibly lifelike. A 96 percent lifelike robot is a human being with something wrong.

So: I want a cartoon avatar.

That’s one question down, but there’s a lot more R&D to do. Next, I think I need to talk with some Artificial Intelligence specialists…

… to make sure that the robot knows what to say if someone in the meeting asks “me” a question.

Our mission: Change the world, get rich.

Posted: January 31, 2012 Filed under: Uncategorized

Changing the world: In addition to freeing up a crapload of time… this will prevent a ginormous number of needless conflicts.

If you hadn’t been at the meeting, you wouldn’t have responded to your colleague’s idiotic comment, and we’d all be better off, right?

Getting rich: Obviously, everybody needs one.